BigFile Lightpaper

Latest Update: January 7, 2025

1. Introduction

Traditional blockchain systems face significant challenges when it comes to storing data in a permanent, on-chain manner. High storage costs and scalability barriers often force reliance on external or centralized solutions, undermining the long-term security and sustainability of decentralized networks.

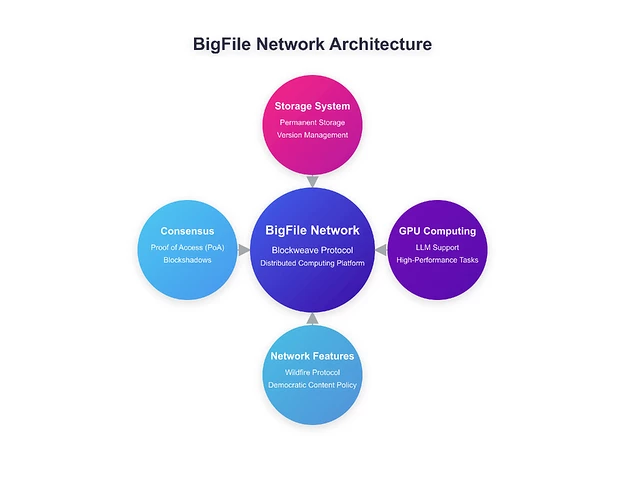

BigFile addresses this issue with a blockweave architecture forked from the Arweave Protocol, designed to store data permanently, cost-effectively, and in a decentralized manner. BigFile also introduces GPU-powered mining to support Large Language Models (LLMs) and other compute-intensive tasks, with the aim of making the network an energy-efficient, highly scalable distributed computing platform rather than one limited to storage and token transfers.

Key Features

- Blockweave Architecture: Eliminates the need to hold all historical blocks, enabling fast verification and reduced synchronization overhead.

- Proof of Access (PoA): Requires miners to prove access to previously stored data, integrating storage utility directly into the consensus mechanism.

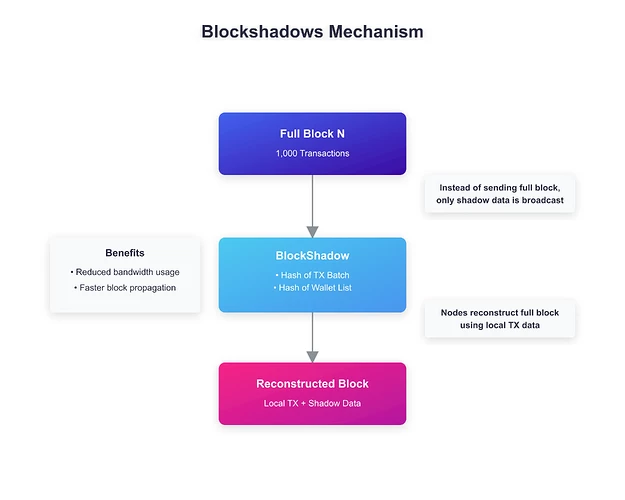

- Blockshadows: Distributes only essential “shadow” information when broadcasting new blocks, minimizing bandwidth usage and improving throughput.

- Wildfire: Encourages rapid data sharing among nodes, boosting network cohesion and punishing uncooperative peers.

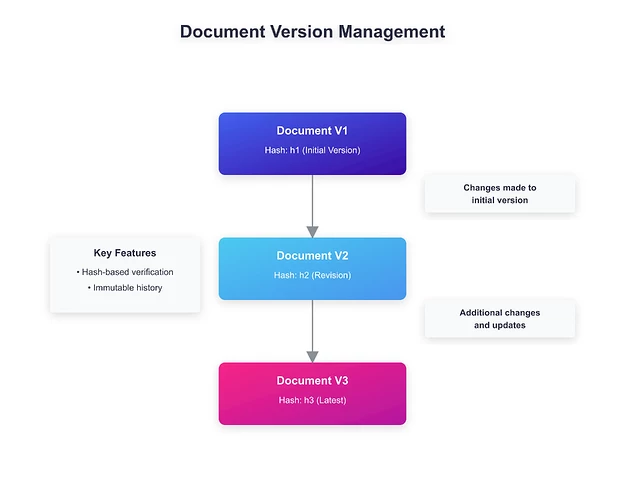

- Version Management: Maintains an immutable version history of documents for transparency and auditability.

- Democratic Content Policy: Empowers nodes to locally filter objectionable content without compromising the network’s decentralized nature.

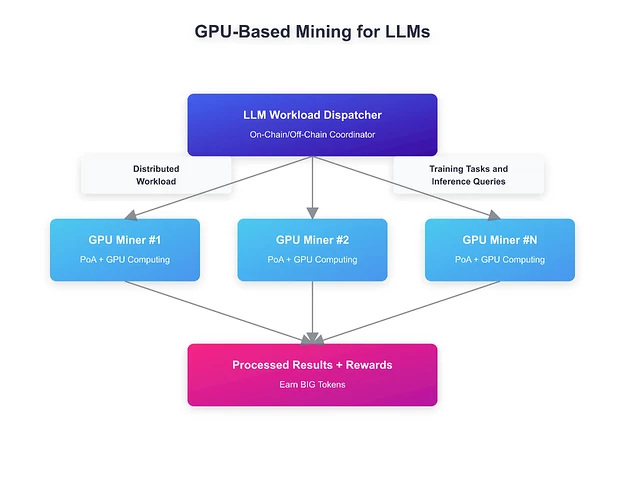

- GPU-Accelerated Mining: Enables participants to provide both storage and computational power (e.g., for LLM workloads), incentivizing a broader range of network functions.

This Lightpaper outlines BigFile’s core design principles, architecture, and target use cases, including its extended capabilities for high-performance computing scenarios.

2. Background and Motivation

2.1 The Storage Conundrum

While blockchains such as Ethereum offer immutable, on-chain data storage, the associated costs (e.g., high gas fees) limit large-scale adoption. Meanwhile, Arweave offers a more economical model. Building upon Arweave’s strengths, BigFile further enhances scalability and flexibility, catering to use cases that span corporate archiving, bureaucratic processes, and compute-intensive AI applications.

2.2 Automating Bureaucratic Processes

Bureaucracy in its conventional form is bogged down by paperwork and costly verification processes. BigFile’s transparent, auditable smart contract framework automates and accelerates these workflows. Combined with Version Management, every update or modification to a document is permanently recorded, reinforcing trust and reducing administrative overhead.

2.3 LLM Workloads and Distributed GPU Utilization

Machine Learning, particularly LLMs, demands massive computational power and large datasets. Current centralized solutions can be expensive and environmentally taxing. BigFile introduces GPU-based mining to decentralize these resource requirements, enabling miners to offer GPU cycles for AI workloads in return for additional incentives. This approach may also reduce reliance on large, centralized data centers by capitalizing on globally distributed GPU resources.

3. Core Technologies

3.1 Blockweave

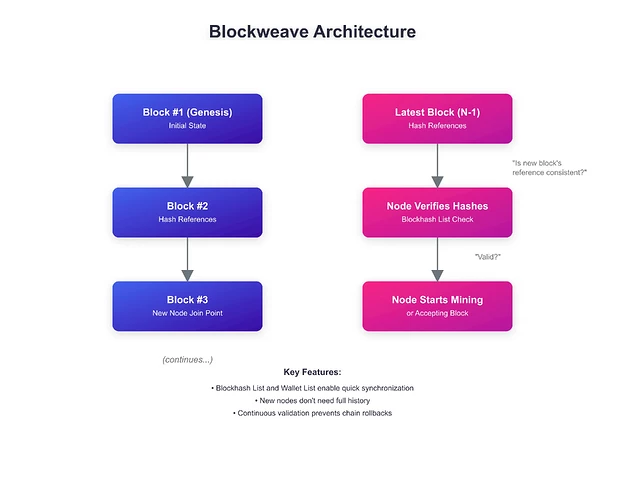

In conventional blockchains (e.g., Bitcoin, Ethereum), full nodes typically download and store the entire chain. By contrast, Blockweave offers:

- Blockhash and Wallet Lists: Nodes join the network by downloading only essential metadata (a hash list of prior blocks and a list of active wallets), eliminating the need to sync all historical blocks.

- Continuous Validation: Even without fully verifying blocks from genesis, miners can still maintain network integrity, thanks to hash references in every new block that protect against rollback attacks.

This architecture significantly lowers the barrier to node participation and improves scalability.
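To make the join-and-validate idea concrete, here is a minimal Python sketch. It assumes, as a simplification, that every block embeds the full list of earlier block hashes; the `Block` and `LightNode` classes and their fields are hypothetical and do not reflect BigFile's actual block format, and the wallet list is left out for brevity.

```python
import hashlib
from dataclasses import dataclass


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class Block:
    height: int
    previous_hash: str
    hash_list: tuple  # hashes of every earlier block (hypothetical layout)
    payload: bytes = b""

    def block_hash(self) -> str:
        # Commit to the height, the parent, the full hash list and the payload.
        header = f"{self.height}|{self.previous_hash}|{','.join(self.hash_list)}|"
        return sha256_hex(header.encode() + self.payload)


class LightNode:
    """A node that joined by downloading only the block hash list."""

    def __init__(self, hash_list: list):
        self.hash_list = list(hash_list)

    def accept(self, block: Block) -> bool:
        # A valid successor must embed exactly the hash list this node holds.
        # Rewriting any earlier block changes that list, so rollbacks are rejected.
        if block.hash_list != tuple(self.hash_list):
            return False
        if block.height != len(self.hash_list):
            return False
        if self.hash_list and block.previous_hash != self.hash_list[-1]:
            return False
        self.hash_list.append(block.block_hash())
        return True
```

A node bootstrapped with only the genesis hash accepts an honest successor, while a block that substitutes a different history fails the hash-list comparison without the node ever holding the old blocks.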

3.2 Proof of Access (PoA)

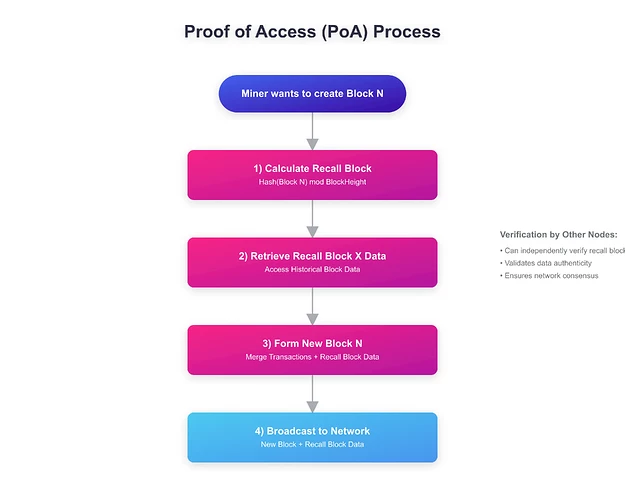

BigFile combines PoW (Proof of Work) with Proof of Access. To produce a new block, miners must reference (“recall”) data from a randomly selected previous block:

- Recall Block Selection: The recall block's index is the current block's hash, interpreted as an integer, modulo the current block height.

- Data Validation: Transactions from the recall block are merged with new transactions to form the next block. This ensures miners have genuine access to historical data.

- Independent Verification: Because the recall block data is broadcast alongside the new block, nodes lacking the recall block can still validate it.

By making data retrieval integral to block production, BigFile ensures a persistent and secure storage layer.
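The recall rule and the independent check can be sketched as follows. This assumes, for illustration only, that `hash_list[i]` is the SHA-256 digest of block `i`'s data and that the hash is read as a big-endian integer; the function names are hypothetical, and BigFile's actual hashing and encoding may differ.

```python
import hashlib


def recall_block_index(block_hash: bytes, height: int) -> int:
    """Index of the recall block: the hash read as a big-endian integer, mod height."""
    if height <= 0:
        raise ValueError("height must be positive")
    return int.from_bytes(block_hash, "big") % height


def verify_recall(hash_list: list, current_hash: bytes, recall_data: bytes) -> bool:
    """Check that the recall data broadcast with a new block is the right block.

    hash_list[i] is assumed to be the SHA-256 digest of block i's data, so a
    verifier that never stored block i can still check what the miner sent.
    """
    index = recall_block_index(current_hash, len(hash_list))
    return hashlib.sha256(recall_data).digest() == hash_list[index]
```

For example, `recall_block_index(b"\x01\x00", 7)` computes 256 mod 7, which is 4. Because the index depends on a hash the miner cannot choose freely, a miner cannot predict which historical data it will need and must keep broad access to the weave.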

3.3 Wildfire

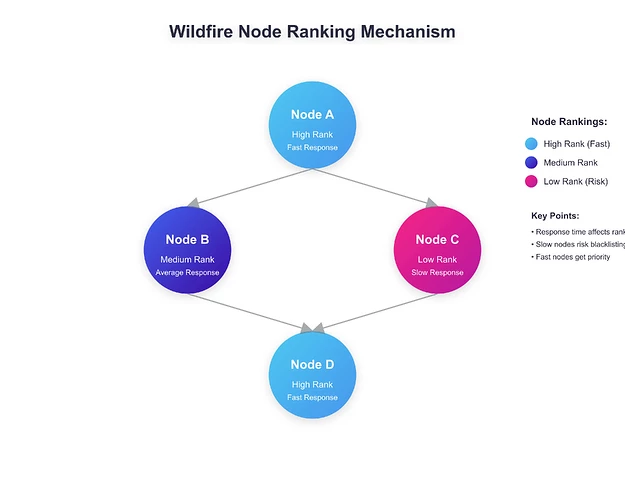

Wildfire incentivizes prompt data exchange among nodes:

- Local Ranking: Each node scores its peers based on how quickly they fulfill data requests.

- Blacklist Mechanism: Nodes that are slow or refuse to share data risk blacklisting and potential exclusion.

- Cooperative Environment: High-scoring nodes earn greater mining advantages, ensuring the network’s overall health and performance.
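A peer-ranking scheme in the spirit of Wildfire might look like the following sketch. The moving-average smoothing, the latency ceiling and the failure limit are hypothetical parameters chosen for illustration, not values taken from BigFile, and the class names are likewise illustrative.

```python
from dataclasses import dataclass, field
from typing import Optional

ALPHA = 0.3              # hypothetical smoothing factor for the latency average
MAX_LATENCY_MS = 2000.0  # hypothetical: peers slower than this are blacklisted
MAX_FAILURES = 3         # hypothetical: refusals tolerated before blacklisting


@dataclass
class PeerStats:
    avg_latency_ms: Optional[float] = None  # exponentially weighted moving average
    failures: int = 0                       # refused or timed-out requests


@dataclass
class WildfireRanker:
    peers: dict = field(default_factory=dict)
    blacklist: set = field(default_factory=set)

    def record_response(self, peer: str, latency_ms: float) -> None:
        stats = self.peers.setdefault(peer, PeerStats())
        if stats.avg_latency_ms is None:
            stats.avg_latency_ms = latency_ms
        else:
            stats.avg_latency_ms = ALPHA * latency_ms + (1 - ALPHA) * stats.avg_latency_ms
        self._update_blacklist(peer, stats)

    def record_failure(self, peer: str) -> None:
        stats = self.peers.setdefault(peer, PeerStats())
        stats.failures += 1
        self._update_blacklist(peer, stats)

    def _update_blacklist(self, peer: str, stats: PeerStats) -> None:
        too_slow = stats.avg_latency_ms is not None and stats.avg_latency_ms > MAX_LATENCY_MS
        if too_slow or stats.failures >= MAX_FAILURES:
            self.blacklist.add(peer)

    def ranked_peers(self) -> list:
        """Non-blacklisted peers, fastest first; unmeasured peers go last."""
        usable = [p for p in self.peers if p not in self.blacklist]
        return sorted(
            usable,
            key=lambda p: (self.peers[p].avg_latency_ms is None, self.peers[p].avg_latency_ms or 0.0),
        )
```

A node would send its own data requests to peers in `ranked_peers()` order, so fast, cooperative peers receive more traffic (and earlier block data) while blacklisted ones are cut off.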

3.4 Blockshadows

Instead of broadcasting the entire block, Blockshadows transmit only minimal data (the “shadow”) essential for reconstructing the full block:

- Minimal Overhead: The shadow typically contains hashes for wallet lists and transactions, not the entire transaction set.

- High Throughput: As long as nodes possess matching transaction data, block assembly is rapid.

- Scalability: Under ideal network conditions (100 Mbps), the system can theoretically handle ~5,000 TPS, helping maintain lower transaction fees even during network peaks.
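The reconstruction step can be sketched as below: the shadow carries only transaction hashes, and the receiver rebuilds the block from its own pool of pending transactions. The `BlockShadow` fields and helper names are hypothetical. The helper `per_tx_budget_bytes` shows the arithmetic behind the throughput figure: at 100 Mbps and 5,000 TPS, each transaction gets 100,000,000 / 5,000 = 20,000 bits, or 2,500 bytes, of link capacity, so broadcasting only hashes in blocks avoids spending that budget twice on transaction data peers already hold.

```python
import hashlib
from dataclasses import dataclass


def tx_id(tx: bytes) -> str:
    return hashlib.sha256(tx).hexdigest()


@dataclass(frozen=True)
class BlockShadow:
    height: int
    previous_hash: str
    wallet_list_hash: str
    tx_ids: tuple  # transaction hashes only, never the transaction bodies


def make_shadow(height: int, previous_hash: str, wallet_list_hash: str, txs: list) -> BlockShadow:
    return BlockShadow(height, previous_hash, wallet_list_hash, tuple(tx_id(t) for t in txs))


def reconstruct(shadow: BlockShadow, mempool: dict) -> tuple:
    """Rebuild the block body from locally known transactions.

    Returns (transactions, missing_ids); the caller would fetch any missing
    transactions from peers before the block can be assembled.
    """
    missing = [i for i in shadow.tx_ids if i not in mempool]
    if missing:
        return [], missing
    return [mempool[i] for i in shadow.tx_ids], []


def per_tx_budget_bytes(link_mbps: float, tps: float) -> float:
    """Link capacity available per transaction at a given speed and throughput."""
    return link_mbps * 1_000_000 / tps / 8
```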

3.5 Version Management

Every document stored on the blockweave is tracked across all historical versions:

- Traceability: Each version points to the previous one, forming an immutable chain of updates.

- Unalterable Records: Any attempt to manipulate or erase previous versions is immediately detectable, reinforcing trust and accountability.
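A hash-linked version chain can be sketched as follows. The `Version` structure and helper functions are hypothetical; the sketch only shows why editing an earlier version breaks the link stored in its successor.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Version:
    content: bytes
    prev_id: Optional[str]  # id of the previous version; None for the first

    @property
    def version_id(self) -> str:
        # The id commits to both the content and the previous version's id.
        prev = (self.prev_id or "").encode()
        return hashlib.sha256(prev + b"|" + self.content).hexdigest()


def append_version(history: list, content: bytes) -> list:
    prev_id = history[-1].version_id if history else None
    return history + [Version(content, prev_id)]


def verify_history(history: list) -> bool:
    """True if every version points at the actual id of the one before it.

    The newest version has no successor, so its id must be anchored elsewhere
    (for example in an on-chain transaction) for edits to it to be detectable.
    """
    if history and history[0].prev_id is not None:
        return False
    return all(later.prev_id == earlier.version_id
               for earlier, later in zip(history, history[1:]))
```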

3.6 Democratic Content Policy

BigFile aims for full decentralization while respecting cultural and legal standards:

- Local Blacklists: Each node decides which content to store or filter, based on local laws or community norms.

- Aggregate Rejection: If more than 50% of nodes blacklist specific content, it effectively becomes excluded from most of the network.

- Minority Protections: Content that is not blacklisted by a majority of nodes remains accessible, in line with the principle of freedom of information.
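The aggregate effect of local blacklists can be modelled in a few lines. This simplified sketch treats each node's blacklist as a set of content ids and applies the "more than 50%" rule literally; the function names are hypothetical.

```python
def nodes_serving(blacklists: dict, content_id: str) -> list:
    """Nodes whose local blacklist does not contain the content."""
    return [node for node, blocked in blacklists.items() if content_id not in blocked]


def network_status(blacklists: dict, content_id: str) -> str:
    """'excluded' only if strictly more than half of all nodes blacklist it.

    Even excluded content may still be served by the minority of nodes
    that did not blacklist it.
    """
    rejecting = len(blacklists) - len(nodes_serving(blacklists, content_id))
    return "excluded" if 2 * rejecting > len(blacklists) else "accessible"
```

With four nodes, two blacklists (exactly 50%) are not enough to exclude content; a third would be needed.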

3.7 GPU-Based Mining for LLMs

A standout feature of BigFile is its support for compute-intensive tasks, such as Large Language Model training and inference:

- Dual Incentives: Miners earn rewards both from storing data (PoA) and contributing GPU cycles for AI processes.

- Distributed Training & Inference: Leveraging globally dispersed GPU resources reduces single-point data center dependencies.

- Energy Efficiency: Decentralizing GPU usage may improve overall energy efficiency compared with monolithic data centers.

- Smart Contract Integration: On-chain LLMs can interface with BigFile’s smart contracts, for instance by gating LLM outputs behind token payments or automatically executing state changes based on AI-inferred insights.

4. Ecosystem and Application Development

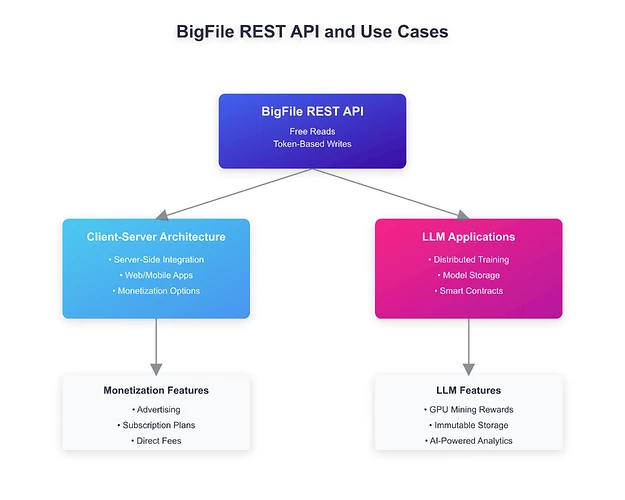

4.1 BigFile REST API

BigFile offers a REST API for reading and writing data, making it straightforward to build both web-based and local applications:

- Free Reads: Data retrieval (reads) from the network incurs no BIG token cost.

- Token-Based Writes: Developers pay fees in BIG tokens to add new data, which deters spam and helps keep the network secure.
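A read-only client can be very small, because reads need no tokens. The sketch below assumes BigFile keeps Arweave-style HTTP endpoints (`GET /tx/{id}/data` and `GET /info`); that is an assumption, as are the default address and the helper names, so check BigFile's own API documentation before relying on it. Depending on the node, transaction data may be returned in an encoded form, so confirm the response format as well. Writes are not shown, since they require a signed transaction that pays a fee in BIG.

```python
import json
import urllib.request

# Hypothetical node address; substitute a real BigFile node or gateway.
NODE_URL = "http://localhost:1984"


def data_url(tx_id: str, node_url: str = NODE_URL) -> str:
    # Assumes an Arweave-style path, /tx/{id}/data; confirm against BigFile's docs.
    return f"{node_url.rstrip('/')}/tx/{tx_id}/data"


def read_data(tx_id: str, node_url: str = NODE_URL, timeout: float = 10.0) -> bytes:
    """Free read: a plain HTTP GET with no wallet, signature or BIG fee."""
    with urllib.request.urlopen(data_url(tx_id, node_url), timeout=timeout) as resp:
        return resp.read()


def node_info(node_url: str = NODE_URL, timeout: float = 10.0) -> dict:
    # Assumes an Arweave-style /info endpoint that returns JSON.
    with urllib.request.urlopen(f"{node_url.rstrip('/')}/info", timeout=timeout) as resp:
        return json.loads(resp.read())
```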

4.2 Architectures and Use Cases

4.2.1 Client-Server

- Server-Side Integration: Web or mobile applications can proxy user interactions with the BigFile network via a backend server.

- Scalable Monetization: Options include advertising, subscription plans, or direct fees for data services.

4.2.2 LLM Applications

- Distributed Model Training: GPU-enabled miners can join training projects, earning proportional rewards based on contributed compute.

- On-Chain Model Storage: LLM weight files (and updates) can be stored immutably on the blockweave, ensuring integrity and verifiability.

- Intelligent Smart Contracts: Leverage AI outputs for advanced use cases, such as document fraud detection or large-scale data analytics, with logic triggered on-chain.
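Proportional rewards for distributed training could be computed as in the sketch below. It assumes rewards are paid in integer base units and that compute is measured in some agreed unit such as GPU-seconds; both are hypothetical, since the section does not specify how contributions are metered. Largest-remainder rounding is one reasonable way to split an integer pool so that no units are lost.

```python
def split_reward(pool: int, contributions: dict) -> dict:
    """Split an integer reward pool in proportion to contributed compute.

    Uses the largest-remainder method so the shares always sum to `pool`.
    """
    total = sum(contributions.values())
    if total <= 0:
        raise ValueError("no compute was contributed")
    # Floor of each miner's exact proportional share.
    shares = {m: pool * c // total for m, c in contributions.items()}
    remainder = pool - sum(shares.values())
    # Give the leftover units to the miners with the largest fractional parts.
    by_fraction = sorted(contributions, key=lambda m: (pool * contributions[m]) % total, reverse=True)
    for m in by_fraction[:remainder]:
        shares[m] += 1
    return shares
```

For example, splitting 1,000 units between contributions of 7 and 2 gives floors of 777 and 222; the one leftover unit goes to the first miner, whose fractional part is larger, for a final split of 778 and 222.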

5. Economic Model and Incentives

In the BigFile ecosystem, BIG tokens serve as the core utility for writing data, mining rewards, and executing LLM computations.

5.1 Token Mechanics

- Transaction Fees: Users need BIG tokens to submit new data to the network.

- Mining Rewards: PoA and GPU mining participants receive block rewards plus a share of transaction fees.

- Storage Incentives: As node participation grows, storage costs per unit of data decrease, reinforcing perpetual data preservation.

5.2 Long-Term Sustainability

- Value Appreciation: Greater data ingestion and AI workloads translate into heightened demand for BIG tokens.

- Community Engagement: More nodes and applications enhance the security, utility, and overall value of the ecosystem.

6. Conclusion

BigFile is a multi-faceted platform that delivers permanent, cost-effective storage, fast and scalable transaction confirmation, automated bureaucracy, and decentralized AI computing via GPU integration:

- Comprehensive Storage: Harnessing an Arweave-based blockweave guarantees tamper-proof permanence at sustainable costs.

- Scalability and Speed: Blockshadows and Wildfire minimize network load, facilitating higher throughput and lower latency.

- Robust Security: Proof of Access integrates data preservation into core consensus, safeguarding the network’s integrity.

- AI-Ready: GPU support positions BigFile to handle compute-intensive tasks such as LLM training and inference, expanding traditional blockchain boundaries.

- Democratic Governance: The network’s decentralized content policy balances free information flow with respect for community standards.

BigFile is not merely another blockchain project. It aims to become the next-generation ecosystem for data storage, bureaucracy optimization, and distributed AI, inviting developers and organizations to explore innovative use cases and actively shape the network’s evolution.

Note: For more in-depth technical documentation and development guides, refer to BigFile’s official GitHub repositories and community resources.